Code-Switching Papers

==Zhou, Xinyuan, et al. “Multi-encoder-decoder transformer for code-switching speech recognition.” arXiv preprint arXiv:2006.10414 (2020).== citations:15

==Zhang, Shuai, et al. “Reducing language context confusion for end-to-end code-switching automatic speech recognition.” arXiv preprint arXiv:2201.12155 (2022).== citations:1

==Zhang, Haobo, et al. “Monolingual Data Selection Analysis for English-Mandarin Hybrid Code-switching Speech Recognition.” arXiv preprint arXiv:2006.07094 (2020).== citations:1

==Yue, Xianghu, et al. “End-to-end code-switching asr for low-resourced language pairs.” 2019 IEEE Automatic Speech Recognition and Understanding Workshop (ASRU). IEEE, 2019.== Beijing Institute of Technology citations:17

Ideas

- We first incorporate a multi-graph decoding approach which creates parallel search spaces for each monolingual and mixed recognition tasks to maximize the utilization of the textual resources from each language.

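- An LSTM LM pretrained on Chinese and English text is used to rescore the recognition hypotheses.

- Data source for this paper: http://corporafromtheweb.org ; the RNN LM is trained with https://github.com/yandex/faster-rnnlm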
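
The rescoring step noted above can be sketched as n-best rescoring: each first-pass hypothesis score is combined with a weighted language-model log-probability, and the list is re-ranked. This is a minimal sketch under stated assumptions, not the paper's implementation. The names `rescore_nbest` and `make_toy_unigram_lm`, the weight `lm_weight`, and the romanized toy data are hypothetical, and the toy unigram model only stands in for the LSTM LM.

```python
import math
from typing import Callable, Dict, List, Tuple

Hypothesis = Tuple[str, float]  # (text, first-pass log score)


def make_toy_unigram_lm(counts: Dict[str, int], floor: float = 1e-4) -> Callable[[str], float]:
    """Build a toy unigram LM (a stand-in for the LSTM LM, for illustration only)."""
    total = sum(counts.values())

    def logprob(text: str) -> float:
        # Unseen words get a small floor probability instead of zero.
        return sum(
            math.log(counts[w] / total) if w in counts else math.log(floor)
            for w in text.split()
        )

    return logprob


def rescore_nbest(
    nbest: List[Hypothesis],
    lm_logprob: Callable[[str], float],
    lm_weight: float = 0.5,
) -> List[Hypothesis]:
    """Re-rank hypotheses by first-pass score + lm_weight * LM log-probability."""
    rescored = [(text, score + lm_weight * lm_logprob(text)) for text, score in nbest]
    return sorted(rescored, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical mixed Mandarin (romanized) / English training counts.
    lm = make_toy_unigram_lm({"wo": 5, "xiang": 4, "order": 3, "pizza": 3})
    nbest = [("wo xiang odor pizza", -3.8), ("wo xiang order pizza", -4.0)]
    for text, score in rescore_nbest(nbest, lm, lm_weight=0.5):
        print(f"{score:.3f}  {text}")
```

In this toy setup the LM penalizes the unseen word, so the second hypothesis can overtake the first even though its first-pass score is lower. In practice `lm_weight` would be tuned on held-out data.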

==Winata, Genta Indra, et al. “Meta-transfer learning for code-switched speech recognition.” arXiv preprint arXiv:2004.14228 (2020).== Hong Kong University of Science and Technology (HKUST) citations:26

Approach

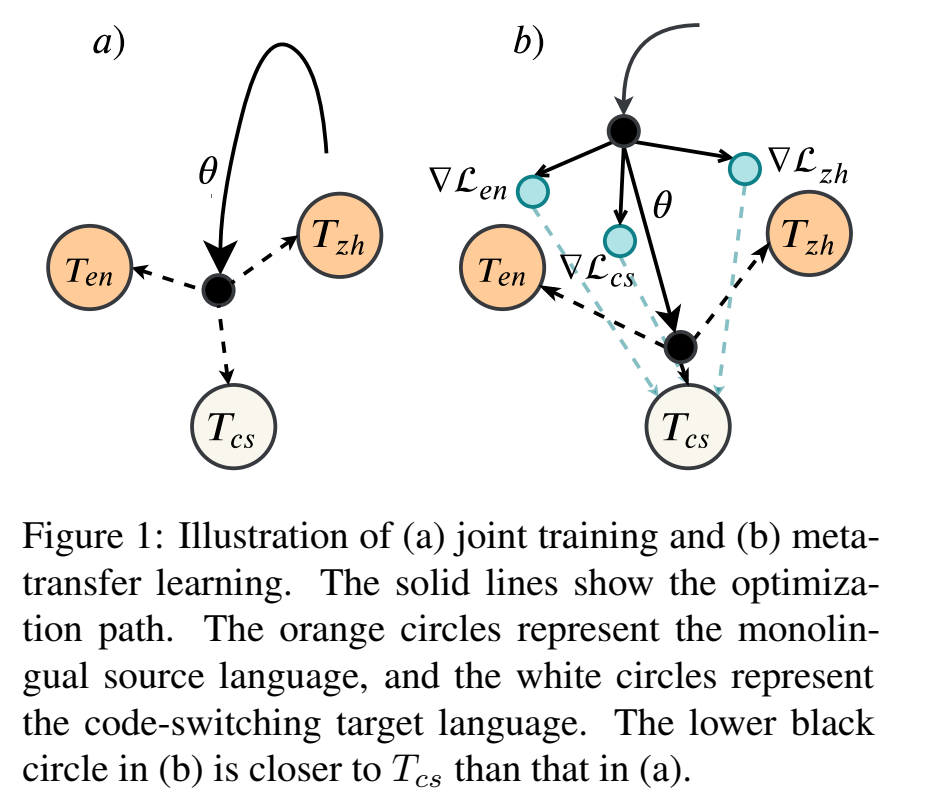

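- Proposes a new learning method, meta-transfer learning, which performs transfer learning for a code-switched speech recognition system in a low-resource setting by judiciously extracting information from high-resource monolingual datasets.

- The model learns to recognize the individual languages and transfers that knowledge by conditioning the optimization on code-switched data, so that it recognizes mixed-language speech better.

- Challenges of the code-switching ASR task: (1) data scarcity; (2) difficulty capturing phonemes that are similar across languages.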

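- Previous approaches to code-switching ASR fall into two categories:

  - Generate synthetic speech data from monolingual resources; however, there is no guarantee that the generated code-switched speech or text is natural.

  - Pretrain a model on large amounts of monolingual data and fine-tune it on the limited code-switched data; however, the knowledge extracted from the monolingual data is insufficient, and the model also forgets the monolingual tasks it learned earlier.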

- Meta-transfer learning is an extension of model-agnostic meta-learning (MAML) (Finn et al., 2017).
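
The idea in the notes above (adapt on monolingual source data, then update the shared parameters using the loss on code-switched target data) can be sketched on a toy problem. This is a hedged illustration, not the paper's model or training code. It uses a one-parameter linear regression in place of an ASR model, a first-order approximation of the meta-gradient as a simplification, and averages over the source batches. The names `meta_transfer_step`, `mse`, `mse_grad`, `inner_lr`, and `outer_lr` are hypothetical.

```python
from typing import Sequence, Tuple

Batch = Sequence[Tuple[float, float]]  # (x, y) pairs for the toy model y ~ w * x


def mse(w: float, batch: Batch) -> float:
    """Mean squared error of the toy model y ~ w * x on a batch."""
    return sum((w * x - y) ** 2 for x, y in batch) / len(batch)


def mse_grad(w: float, batch: Batch) -> float:
    """Gradient of the mean squared error with respect to w."""
    return sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)


def meta_transfer_step(
    w: float,
    source_batches: Sequence[Batch],
    target_batch: Batch,
    inner_lr: float,
    outer_lr: float,
) -> float:
    """One meta-update: inner steps on source data, outer step from target loss."""
    meta_grad = 0.0
    for source_batch in source_batches:
        # Inner step: adapt the shared parameter on one monolingual source batch.
        w_adapted = w - inner_lr * mse_grad(w, source_batch)
        # First-order approximation: use the target-loss gradient evaluated
        # at the adapted parameter, ignoring second-order terms.
        meta_grad += mse_grad(w_adapted, target_batch)
    # Outer step: move the shared parameter using the averaged target gradient.
    return w - outer_lr * meta_grad / len(source_batches)


if __name__ == "__main__":
    # Two hypothetical "monolingual" sources (slopes 1 and 3) and a
    # "code-switched" target (slope 2.5).
    sources = [[(1.0, 1.0), (2.0, 2.0)], [(1.0, 3.0), (2.0, 6.0)]]
    target = [(1.0, 2.5), (2.0, 5.0)]
    w = 0.0
    for _ in range(200):
        w = meta_transfer_step(w, sources, target, inner_lr=0.05, outer_lr=0.05)
    print(w, mse(w, target))
```

The point of the sketch is that only the target (code-switched) loss drives the outer update, while the source data is used only for the inner adaptation step.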

==Winata, Genta Indra. “Multilingual Transfer Learning for Code-Switched Language and Speech Neural Modeling.” arXiv e-prints (2021): arXiv-2104.== citations:4

==Aguilar, Gustavo, and Thamar Solorio. “From English to code-switching: Transfer learning with strong morphological clues.” arXiv preprint arXiv:1909.05158 (2019).== citations:21

==Bai, Junwen, et al. “Joint unsupervised and supervised training for multilingual asr.” ICASSP 2022-2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, 2022.== citations:7